According to Facebook AI Research, the next generation of robots should be much better at feeling — not emotions, of course, but using the sense of touch. And to advance the ball in this relatively new area of AI and robotics research, the company and its partners have built a new kind of electronic skin and fingertip that are inexpensive, durable, and provide a basic and reliable tactile sense to our mechanical friends.

The question of why exactly Facebook is looking into robot skin is obvious enough that AI head Yann LeCun took it on preemptively on a media call showing off the new projects.

Funnily enough, he recalled, it started with Zuckerberg noting that the company seemed to have no good reason to be looking into robotics. LeCun seems to have taken this as a challenge and started looking into it, but a clear answer emerged in time: if Facebook was to be in the business of providing intelligent agents — and what self-respecting tech corporation isn’t? — then those agents need to understand the world beyond the output of a camera or microphone.

The sense of touch isn’t much good at telling whether something is a picture of a cat or a dog, or who in a room is speaking, but if robots or AIs plan to interact with the real world, they need more than cameras and microphones can tell them.

“What we’ve become good at is understanding pixels and appearances,” said FAIR research scientist Roberto Calandra. “But understanding the world goes beyond that. We need to go towards a physical understanding of objects to ground this.”

While cameras and microphones are cheap and there are lots of tools for efficiently processing that data, the same can’t be said for touch. Sophisticated pressure sensors simply aren’t popular consumer products, and so any useful ones tend to stay in labs and industrial settings.

The DIGIT project is quite an old one, with the principle dating back to 2009. We wrote about the MIT project called GelSight in 2014, then again in 2020; it has since been spun out as a company, which is now the manufacturing partner for this well-documented approach to touch. Basically you have magnetic particles suspended in a soft gel surface, and a magnetometer beneath it senses the displacement of those particles, translating those movements into accurate force maps of the pressures causing them.

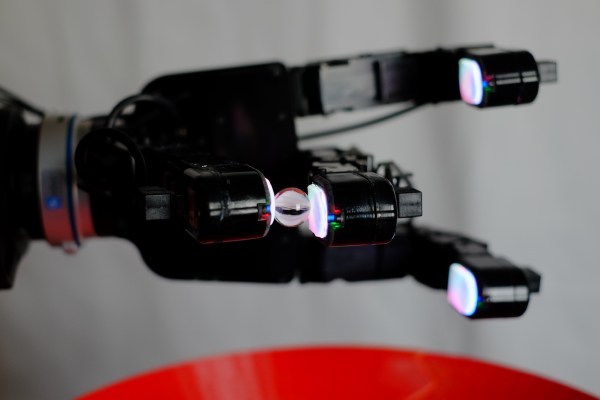

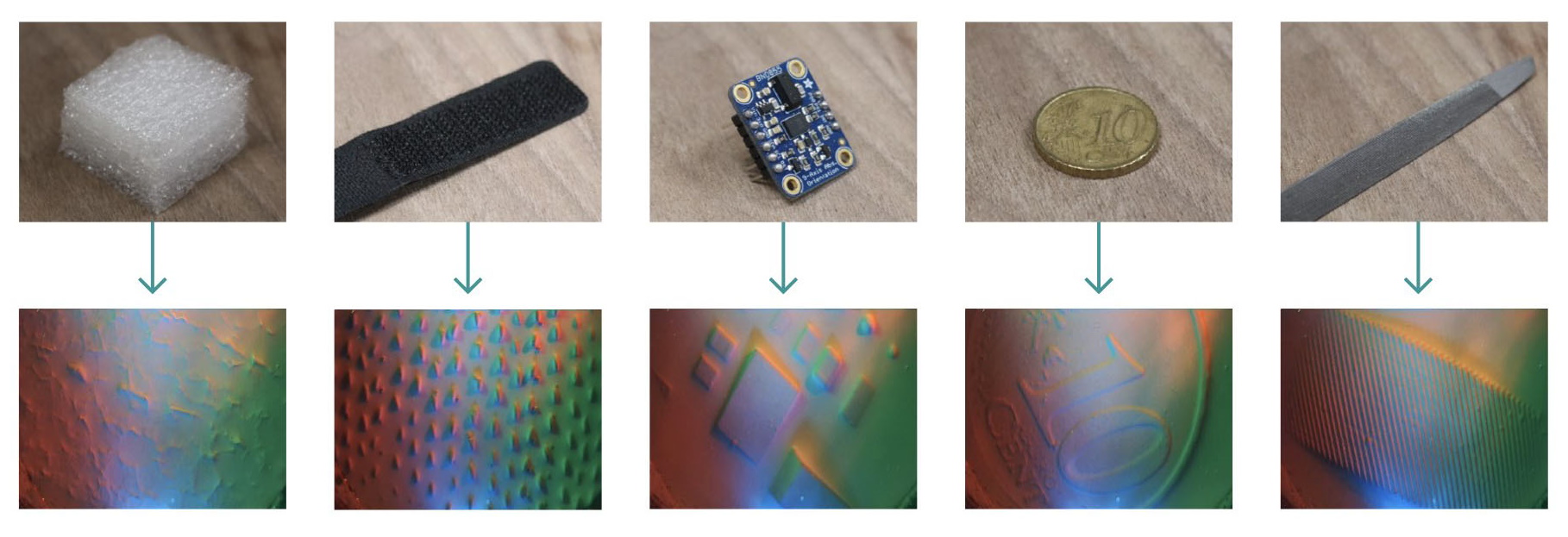

This particular implementation (you can see the fingertips themselves in the image at top) is improved and quite sensitive, as you can see from the detailed pressure maps it’s able to create when touching various items.

ReSkin is a scaled-up version of the same idea, but spread over a larger area. One of the advantages of the GelSight type system is that the hard component — the chip with the magnetometer and logic and so on — is totally separate from the soft component, which is just a flexible pad impregnated with magnetic dots. That means the surface can get dirty or scratched and is easily replaced, while the sensitive part can hide safely below.

In the case of ReSkin, it means you can hook a bunch of the chips up in any shape and lay a slab of magnetic elastomer on top, then integrate the signals and you’ll get touch information from the whole thing. Well… it’s not quite that simple, since you have to calibrate it and all, but it’s a lot simpler than other artificial skin systems that can operate at scales beyond a couple of square inches.
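For the curious, here is a toy sketch of what "calibrate, then integrate the signals" could look like in code. It is not Facebook's software and the real calibration is surely more involved: every function name, the chip layout, and the single-gain linear model are assumptions made up for illustration. Each chip is assumed to report a three-axis magnetic field reading, a no-contact baseline is subtracted, one gain is fitted from a few presses of known force, and the contact point is guessed as a signal-weighted average of chip positions.

```python
import math

def subtract_baseline(reading, baseline):
    """Per-chip field change: current reading minus the no-contact baseline."""
    return [tuple(r - b for r, b in zip(chip, base))
            for chip, base in zip(reading, baseline)]

def chip_magnitudes(delta):
    """Magnitude of the field change seen by each chip."""
    return [math.sqrt(sum(c * c for c in chip)) for chip in delta]

def signal_strength(delta):
    """Total field change across the whole patch of skin."""
    return sum(chip_magnitudes(delta))

def fit_gain(strengths, forces):
    """Least-squares gain for the assumed model: force = gain * strength."""
    num = sum(s * f for s, f in zip(strengths, forces))
    den = sum(s * s for s in strengths)
    return num / den

def contact_centroid(delta, positions):
    """Signal-weighted average of chip (x, y) positions; None if no signal."""
    weights = chip_magnitudes(delta)
    total = sum(weights)
    if total == 0:
        return None
    return tuple(sum(w * p[i] for w, p in zip(weights, positions)) / total
                 for i in range(2))

# Hypothetical two-chip strip; positions in millimetres, fields in arbitrary units.
positions = [(0.0, 0.0), (10.0, 0.0)]
baseline = [(1.0, 2.0, 3.0), (1.0, 2.0, 3.0)]

# Calibration presses: (raw reading, known applied force). Invented numbers.
presses = [
    ([(1.0, 2.0, 6.0), (1.0, 2.0, 3.0)], 1.5),
    ([(1.0, 2.0, 3.0), (1.0, 2.0, 7.0)], 2.0),
]
strengths = [signal_strength(subtract_baseline(r, baseline)) for r, _ in presses]
gain = fit_gain(strengths, [f for _, f in presses])

# A new touch somewhere between the two chips.
delta = subtract_baseline([(1.0, 2.0, 5.0), (1.0, 2.0, 5.0)], baseline)
print("estimated force:", gain * signal_strength(delta))
print("estimated contact point:", contact_centroid(delta, positions))
```

The appeal of the separate-pad design shows up even in a toy like this: swap in a fresh elastomer pad and only the baseline and gain need to be measured again, while the chips and the code stay put.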

You can even make it into little dog shoes, because why not?

With a pressure-sensitive surface like this, robots and other devices can more easily sense the presence of objects and obstacles, without relying on, say, extra friction from the joint exerting force in that direction. This could make assistive robots much more gentle and responsive to touch, not that there are many assistive robots out there to begin with. But part of the reason is that they can’t be trusted not to crush things or people, since they don’t have a good sense of touch!

Facebook’s work here isn’t about new ideas, but about making an effective approach more accessible and affordable. The software framework will be released publicly and the devices can be bought fairly cheaply, so it will be easier for other researchers to get into the field.