Apple has announced a range of accessibility features designed for people with mobility, vision, hearing, and cognitive limitations. These features will be available through software updates later this year. One of the most interesting lets people with limb differences navigate the Apple Watch interface using AssistiveTouch. iPhone and iPad users will also get new accessibility-focussed features. Additionally, Apple has announced a new sign language interpreter service called SignTime that will be available for communicating with AppleCare and retail customer care.

AssistiveTouch on watchOS will allow Apple Watch users to navigate a cursor on the display through a series of hand gestures, such as a pinch or a clench. Apple says the Apple Watch will use built-in motion sensors like the gyroscope and accelerometer, along with the optical heart rate sensor and on-device machine learning, to detect subtle differences in muscle movement and tendon activity.

New gesture control support through AssistiveTouch will let people with limb differences more easily answer incoming calls, control an onscreen motion pointer, and access Notification Centre and Control Centre, all on an Apple Watch, without requiring them to touch the display or move the Digital Crown. However, the company has not provided any details about which Apple Watch models will be compatible with the new features.

In addition to the gesture controls on the Apple Watch, iPadOS will bring support for third-party eye-tracking devices to allow users to control an iPad using their eyes. Apple says compatible MFi (Made for iPad) devices will track where a person is looking on the screen and move the pointer to follow the person’s gaze. This will work for performing different actions on the iPad, including a tap, without requiring users to touch the screen.

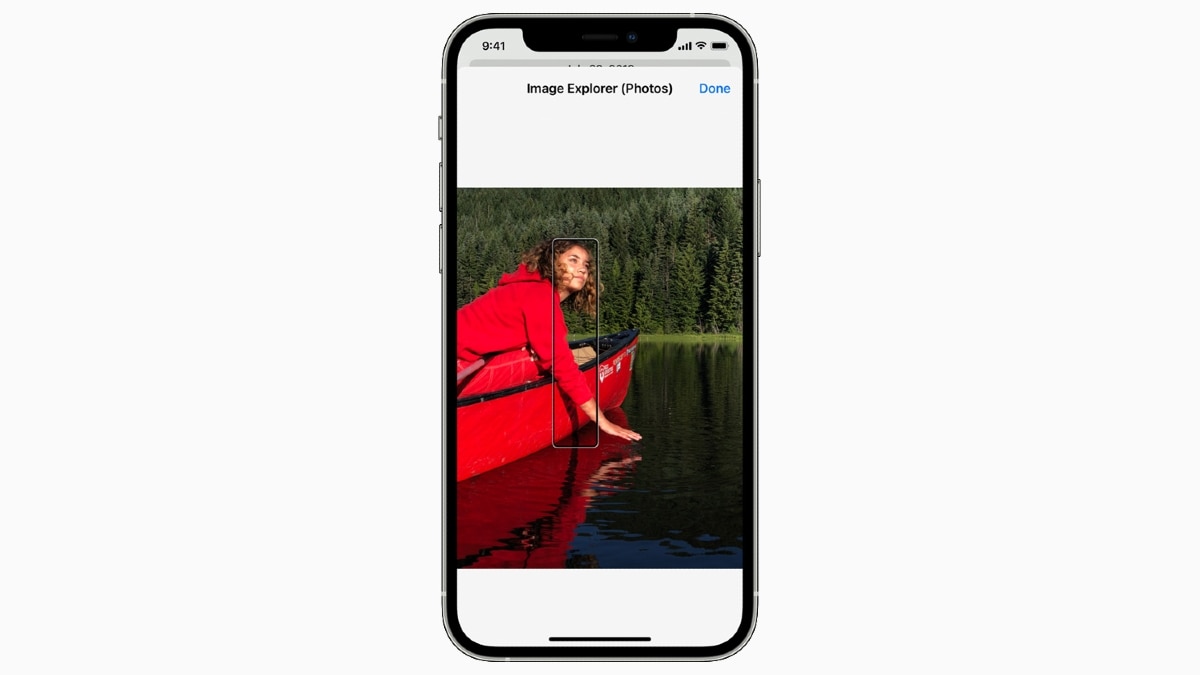

Apple is also updating its preloaded screen reader, VoiceOver, to let people explore more details in images. These details will include text, table data, and other objects. People will also be able to add their own descriptions to images with Markup to bring a personalised feel.

Apple is updating VoiceOver with the ability to detail more about images

Photo Credit: Apple

For neurodiverse people or anyone who is distracted by everyday sounds, Apple is bringing background sounds such as balanced, bright, and dark noise, as well as ocean, rain, and stream sounds that will continue to play in the background to mask unwanted environmental or external sound. These will “help users focus, stay calm, or rest”, Apple said.

Apple is also bringing support for mouth sounds such as a click, pop, or “ee” sound to replace physical buttons and switches for non-speaking users with limited mobility. Users will also be able to customise display and text size settings for each app individually. Additionally, there will be new Memoji customisations to represent users with oxygen tubes, cochlear implants, and a soft helmet for headwear.

Apple’s Memoji customisations will get cochlear implants, oxygen tubes, and a soft helmet for headgear

Photo Credit: Apple

Alongside its primary software changes, Apple is adding support for new bi-directional hearing aids to its MFi (Made for iPhone) hearing devices programme. The next-generation models from MFi partners will be available later this year, the company said.

Apple is also introducing support for recognising audiograms — charts that show the results of a hearing test — to Headphone Accommodations. It will allow users to upload their hearing test results to Headphone Accommodations to more easily amplify soft sounds and adjust certain frequencies to match their hearing capabilities.

Users have not been provided with any concrete timelines for when they can expect the new features to reach their Apple devices. However, it is safe to expect that some details should be announced at the Apple Worldwide Developers Conference (WWDC) next month.

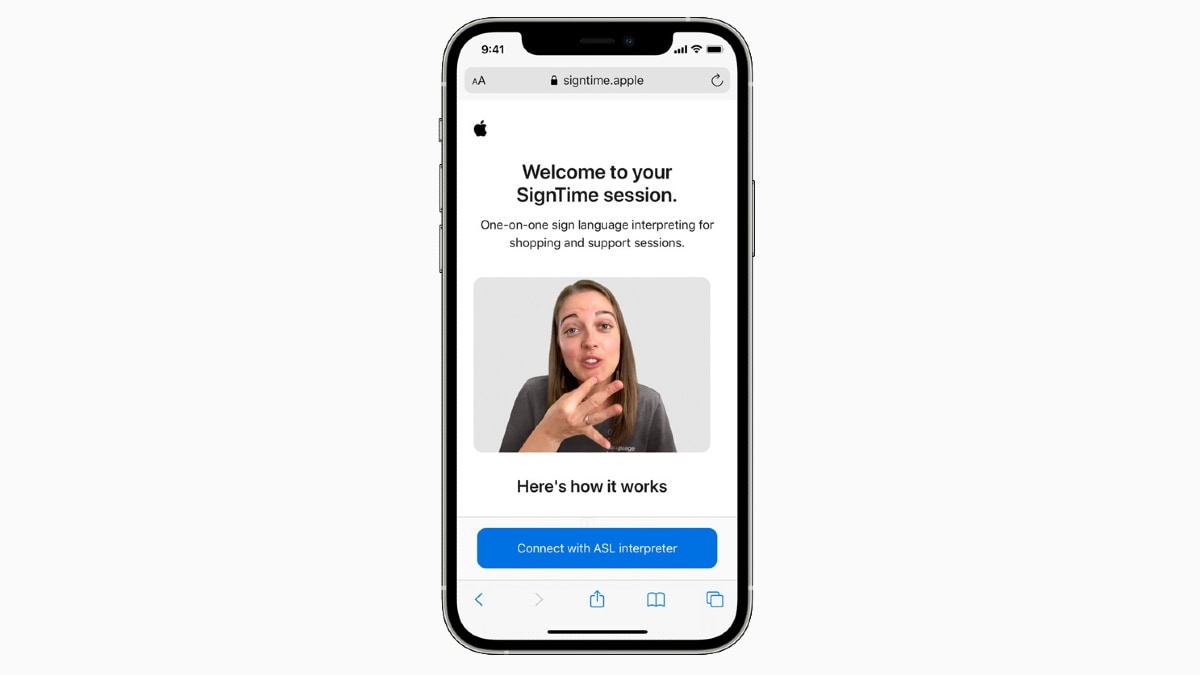

Apple will also be launching the SignTime service for communicating with AppleCare and retail customer care using American Sign Language (ASL) in the US, British Sign Language (BSL) in the UK, and French Sign Language (LSF) in France directly from a Web browser. It will also be available at physical Apple Stores, where customers can remotely access a sign language interpreter without any prior booking.

Apple is introducing the SignTime sign language interpreter service for easy communication with service staff

Photo Credit: Apple

The SignTime service will initially be available in the US, UK, and France starting May 20. Apple plans to expand the service to other countries in the future, though details on that front may be revealed at a later stage.